
I think I sound like an old fogey whenever I tell fresh graduates they should be happy that AI has not replaced them yet, because it means they can still learn the old-fashioned way. But it's not really a "be grateful you have a job" message. I'm also not saying that fresh grads can't flourish (they are the digital natives) or learn to use AI to their advantage; in fact, I hope they do. It's about not getting caught in a transitional period, which tends to be painful because there is no established precedent. If you can learn the old-fashioned way over the next few years, you have an established pathway: you know you can build up your skills to a certain level. However, if fresh graduates are replaced with AI, that pathway disappears and you have to find some other way to train up.

Now back to the question of how legal tech can or cannot transform the industry. Some basic insights (which I don't fully agree with) are:

1. Know what saves you time and what does not.

The example given: you really don't want AI to review your contracts, because a lawyer still has to review the AI's work, which wastes time. Instead, focus on using AI for admin work.

2. Lawyers and legal technologists should work hand in hand.

Basically, lawyers should tell the software engineers what they need, and the engineers can then build a great product (with good prompt engineering and so on) based on the lawyers' expert input.

Why I disagree

I don't fundamentally disagree with either premise. Obviously we should use AI tools in ways that actually help us rather than slow us down, and we should tap into expertise as needed.

My issue with the "AI can't replace humans" narrative is that even if it's true, AI can still replace SOME humans. If you previously needed two juniors and one senior to review a contract, an AI that is as good as the juniors can replace the juniors. A related concern is that AI deskills people: if you don't do the work yourself, you lose touch. My counter is that even before AI, seniors already relied on juniors to produce first cuts. So seniors were already in the reviewer role; the only difference is whether they review an AI's work or a junior's.

As for lawyers (or experts in whatever the industry is) working with technologists, I don't dispute that. But I think it has to be driven by the industry expert and not the tech people, because only the experts know what the real-life workflows are, and those workflows define the scope of the AI solution. Even within the same industry, different people may have different workflows.

Where are the open source products?

My pet peeve here is that (I think), from an engineering perspective, many AI solutions are easy to build and rely heavily on reusable standard components. This is exactly what open source is good for: by releasing to the public, you achieve the greatest good. Proprietary solutions can take it to the next level, but my point is that the baseline open source foundation should be there. Why? So that any small firm can just load it onto its own computers and use it, without worrying about its data going out, paying hefty subscription fees and so on.

For anyone who wants to do RAG (retrieval-augmented generation), there is already an open source, OUT OF THE BOX solution (RAGFlow). It is extremely configurable and, in my view, state of the art for 80% of use cases. If I want to create a "document vault", I don't have to mess around with building my own vector database, embeddings and so on: I just throw the docs into a RAGFlow knowledge base, and it's accessible by API call, with system prompts and other settings all editable.
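For a sense of what an out-of-the-box tool saves you from building, here is a minimal sketch of the retrieval half of a "document vault": chunk each document, turn each chunk into a vector, and return the chunks closest to a query. This is not RAGFlow's implementation or API. `DocumentVault`, `chunk`, `embed` and `cosine` are hypothetical names, and `embed` is a toy word-count vector standing in for a real embedding model; a real setup would also keep the vectors in a proper vector database.

```python
import math
import re
from collections import Counter


def chunk(text: str, max_words: int = 50) -> list[str]:
    """Split a document into fixed-size word windows (real tools split more cleverly)."""
    words = text.split()
    return [" ".join(words[i:i + max_words]) for i in range(0, len(words), max_words)]


def embed(text: str) -> Counter:
    """Toy stand-in for an embedding model: lower-cased word counts."""
    return Counter(re.findall(r"[a-z]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse word-count vectors."""
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(v * v for v in a.values()))
    norm_b = math.sqrt(sum(v * v for v in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


class DocumentVault:
    """Hypothetical in-memory 'vector store' holding (chunk, vector) pairs."""

    def __init__(self) -> None:
        self.entries: list[tuple[str, Counter]] = []

    def add(self, text: str) -> None:
        # Index time: chunk the document and embed every chunk once.
        for piece in chunk(text):
            self.entries.append((piece, embed(piece)))

    def search(self, query: str, k: int = 3) -> list[str]:
        # Query time: embed the question and rank stored chunks by similarity.
        q = embed(query)
        ranked = sorted(self.entries, key=lambda e: cosine(q, e[1]), reverse=True)
        return [piece for piece, _ in ranked[:k]]


vault = DocumentVault()
vault.add("The Supplier shall indemnify the Customer against all losses arising from breach.")
vault.add("This Agreement is governed by the laws of Singapore.")
print(vault.search("governing law of this agreement", k=1))
```

In a full RAG pipeline the retrieved chunks would then be inserted into the LLM's prompt as context; packaging that step together with a real embedding model and persistent storage is roughly what a ready-made tool takes off your hands.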

Another case in point: I haven't been able to find an open source redlining solution, even though it sounds like it would be really useful to many people and trivial to build from a tech perspective (OK, perhaps not completely trivial, but any software engineer should be able to do it). So I have to try to build it myself, which is far from ideal. But it is, I think, an example of why solutions have to be user-driven: users are the ones with the real-life problems that need solving.
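At its core, redlining is a diff between the original clause and a revised one. A minimal sketch, assuming the revised text comes from elsewhere (for example an LLM's suggested rewrite): split both versions into word tokens, let the standard-library `difflib.SequenceMatcher` work out what changed, and mark deletions as `[-deleted-]` and insertions as `{+inserted+}`. The function names and the markup style are illustrative choices, not an existing tool's format.

```python
import difflib
import re


def tokenize(text: str) -> list[str]:
    """Words, runs of whitespace and single punctuation marks, so tokens rejoin exactly."""
    return re.findall(r"\w+|\s+|[^\w\s]", text)


def redline(original: str, revised: str) -> str:
    """Word-level redline: [-deleted-] for removed text, {+inserted+} for added text."""
    a, b = tokenize(original), tokenize(revised)
    matcher = difflib.SequenceMatcher(None, a, b, autojunk=False)
    out = []
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            out.append("".join(a[i1:i2]))
            continue
        # 'replace' has both sides, 'delete' only the old side, 'insert' only the new side.
        if i2 > i1:
            out.append("[-" + "".join(a[i1:i2]) + "-]")
        if j2 > j1:
            out.append("{+" + "".join(b[j1:j2]) + "+}")
    return "".join(out)


print(redline("The Supplier shall pay within 30 days.",
              "The Supplier shall pay within 60 days."))
# The Supplier shall pay within [-30-]{+60+} days.
```

Diffing by word rather than by character keeps the result readable for a lawyer: a changed number shows up as a whole-word swap instead of scattered single-character edits.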

I used to think that tech bros just didn't care about building boring (but very useful) things like a redlining tool because they aren't sexy enough. But increasingly I think that a general tech person perhaps just doesn't know what the market needs; usually it's someone with a vision (like Winston Weinberg, the co-founder of Harvey) who gives direction to a tech team. Perhaps also, for those who have built platforms, the desire to monetize them keeps a lot of these architectures opaque. But if you just watch a demo, it's clear that it's not that hard. Just check out https://www.artificiallawyer.com/2025/10/06/openai-shows-off-contract-review-agent/.

And since I don't have a tech team, or big law firms or VCs queuing up to give me money to assemble one, I guess I have to BE that tech team too. Again, not ideal.

The beauty of open source is that you don't have to worry about someone stealing your ideas or eating your lunch. In fact, I really wish someone would build it already.

There also isn't (yet, to my knowledge) a truly no-code, user-friendly component builder. Yes, there's stuff like Langflow, n8n and so on, but they are lacking: there aren't enough useful out-of-the-box components. Admittedly, my competence level is too low to use these tools properly, but my point is that you shouldn't need much, or any, technical competence to create your own AI workflow.

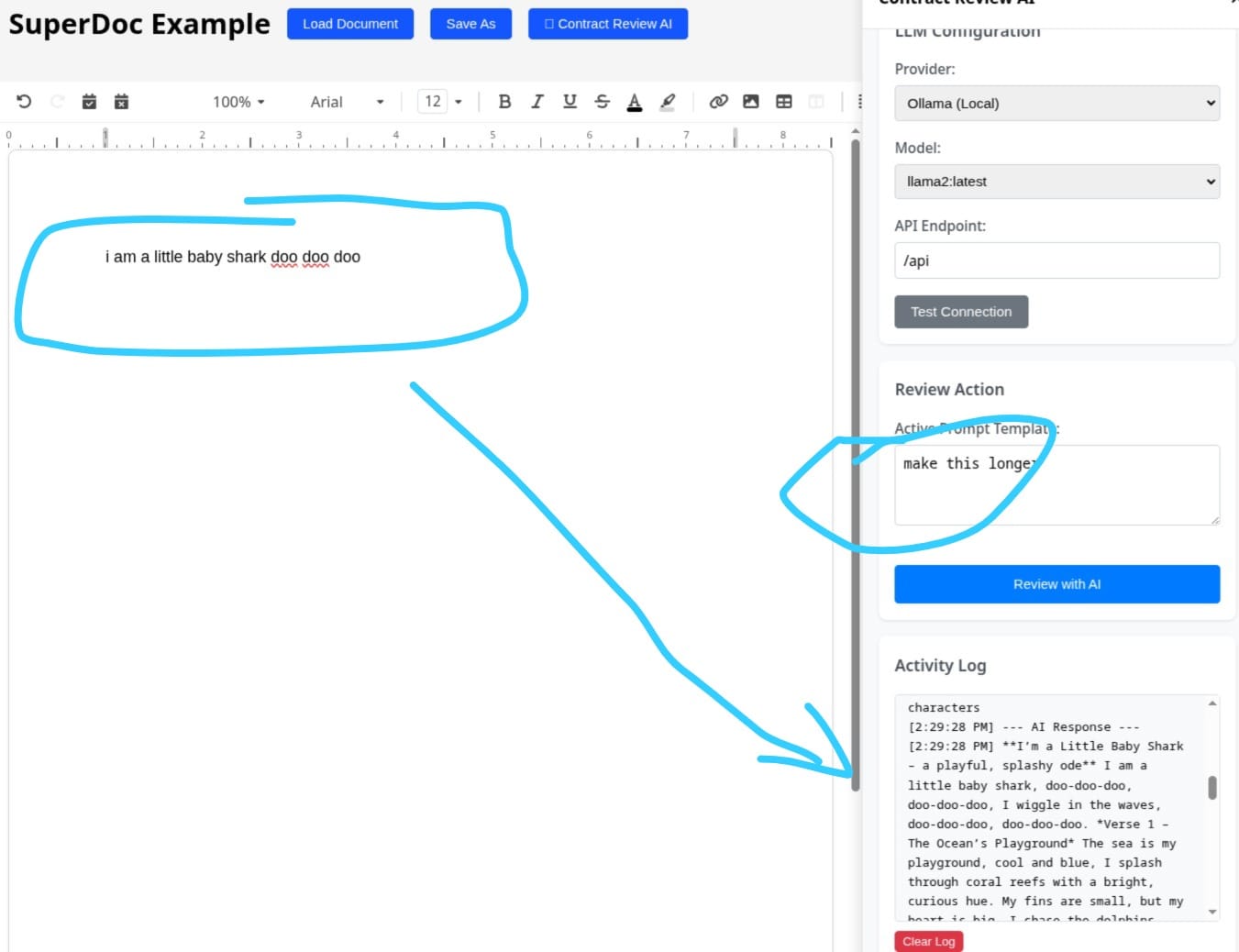

The TL;DR: I'm building a custom Superdoc extension that will allow a self-hosted LLM to redline contract clauses. The gap in the open source market right now is that there is no true redlining (a diff function). I've gotten past the "nitty gritty" (long story: it would probably be trivial to a tech bro but took me a LONG time) and am finally at the point where I can work on the diff logic, which is basically where the real work starts. Wish me luck (or, even better, help me build it!!)
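Beyond the diff itself, a .docx redline has to be expressed as real tracked changes. In WordprocessingML, Word's tracked changes are stored as `w:del` and `w:ins` elements wrapping runs, with deleted text held in `w:delText` instead of `w:t`. A minimal sketch, assuming a single plain-text paragraph with no existing formatting and a `w:` namespace declared on the enclosing document. It does not use Superdoc's extension API, and `tracked_paragraph` and the default author `"LLM"` are hypothetical; a `w:date` timestamp could be added to each revision but is omitted here.

```python
import difflib
import re
from xml.sax.saxutils import escape


def _tokens(text: str) -> list[str]:
    # Word-level tokenization: words, whitespace runs and single punctuation marks.
    return re.findall(r"\w+|\s+|[^\w\s]", text)


def _run(text: str, deleted: bool = False) -> str:
    """One WordprocessingML run; deleted text must sit in w:delText, not w:t."""
    tag = "w:delText" if deleted else "w:t"
    # xml:space="preserve" keeps leading/trailing spaces from being dropped.
    return f'<w:r><{tag} xml:space="preserve">{escape(text)}</{tag}></w:r>'


def tracked_paragraph(original: str, revised: str, author: str = "LLM") -> str:
    """Return a <w:p> in which the differences appear as Word tracked changes."""
    a, b = _tokens(original), _tokens(revised)
    who = escape(author, {'"': "&quot;"})  # author is placed inside an attribute value
    parts, rev_id = [], 0
    matcher = difflib.SequenceMatcher(None, a, b, autojunk=False)
    for tag, i1, i2, j1, j2 in matcher.get_opcodes():
        if tag == "equal":
            parts.append(_run("".join(a[i1:i2])))
            continue
        if i2 > i1:  # text removed by the revision
            rev_id += 1
            removed = _run("".join(a[i1:i2]), deleted=True)
            parts.append(f'<w:del w:id="{rev_id}" w:author="{who}">{removed}</w:del>')
        if j2 > j1:  # text added by the revision
            rev_id += 1
            added = _run("".join(b[j1:j2]))
            parts.append(f'<w:ins w:id="{rev_id}" w:author="{who}">{added}</w:ins>')
    return "<w:p>" + "".join(parts) + "</w:p>"


print(tracked_paragraph("pay within 30 days", "pay within 60 days"))
```

In a real document the `w:id` values would need to avoid clashing with revisions already in the file, and each paragraph's original run formatting would have to be carried over, which this sketch ignores.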