Is it worth it to rent virtual machines (VMs), aka computers in the cloud? I've been running these calculations in my head since I first tried cloud gaming on GCP more than 2 years ago.

To answer this, we have to compare the cost of renting a VM against the cost of buying a similar PC plus the electricity to run it. I want to find the break-even point, i.e., how long I have to own the PC, and how many hours a day I have to use it, before buying becomes cheaper than renting.

For this purpose I assume the system has an RTX 5060 Ti or equivalent GPU, a reasonably recent CPU, 16GB of RAM and a 512GB SSD. All prices below are in SGD.

*Executive Summary*

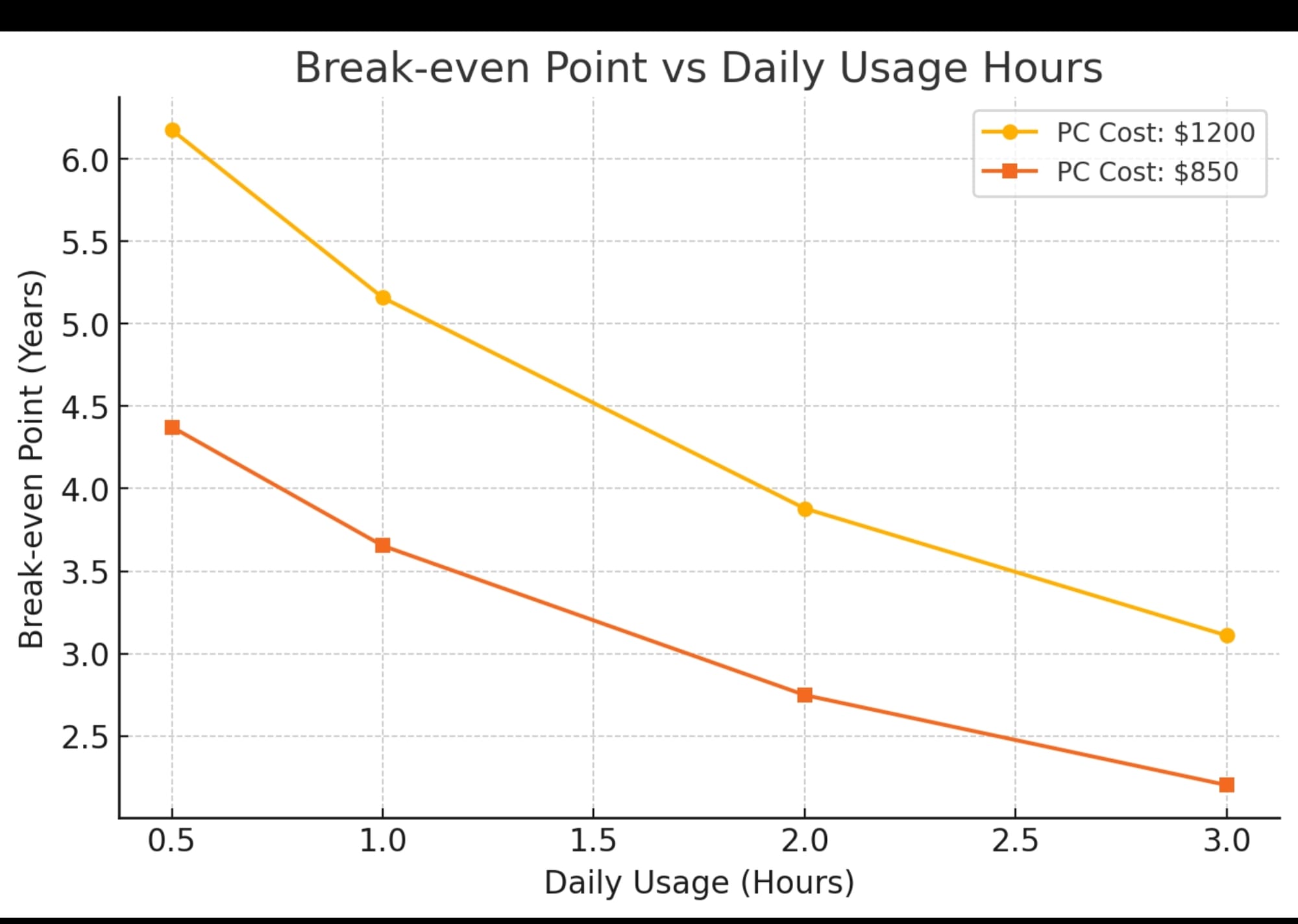

Based on ChatGPT's analysis, here are the break-even points in years for different daily usage patterns.

For a $1200 PC:

0.5 hr/day → 6.18 years

1 hr/day → 5.16 years

2 hr/day → 3.88 years

3 hr/day → 3.11 years

6 hr/day → 1.95 years

For an $850 PC:

0.5 hr/day → 4.37 years

1 hr/day → 3.65 years

2 hr/day → 2.75 years

3 hr/day → 2.20 years

6 hr/day → 1.38 years
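The list above can be approximated with a short Python sketch. Its inputs are assumptions borrowed from the manual calculations further down (13 SGD a month for storage, 21.2 cents per hour of compute), plus a 365-day year and no electricity. ChatGPT's exact inputs aren't shown, so the sketch lands within about 0.02 years of its figures rather than matching them exactly (for example 5.14 years instead of 5.16 for the $1200 PC at 1 hr/day). The function name `break_even_years_for_usage` is hypothetical.

```python
# Sketch: approximate break-even years for buying vs renting.
# Assumed inputs: 13 SGD/month storage, 0.212 SGD/hour compute,
# 365-day year, electricity ignored. ChatGPT's exact inputs are unknown.
STORAGE_PER_MONTH = 13.00  # SGD, billed whether or not the VM runs
COMPUTE_PER_HOUR = 0.212   # SGD per hour of use, storage excluded
DAYS_PER_YEAR = 365


def break_even_years_for_usage(pc_cost: float, hours_per_day: float) -> float:
    """Years until cumulative rental spend equals the PC's purchase price."""
    yearly_rent = (STORAGE_PER_MONTH * 12
                   + COMPUTE_PER_HOUR * hours_per_day * DAYS_PER_YEAR)
    return pc_cost / yearly_rent


for pc_cost in (1200, 850):
    print(f"For a ${pc_cost} PC:")
    for hours in (0.5, 1, 2, 3, 6):
        years = break_even_years_for_usage(pc_cost, hours)
        print(f"  {hours} hr/day -> {years:.2f} years")
```

Because the storage fee is fixed, low daily usage is dominated by it, which is why the curve flattens out at the 0.5 hr/day end.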

One may argue that past a certain number of years it no longer makes sense to buy, because the equipment would be obsolete. That's a valid point, but an RTX 2080 (a 7-year-old card) or even a 1080 (a 9-year-old card) can still play Baldur's Gate 3 at 1080p in 2025, and since I don't play demanding games, even 6 years is within the usable gaming lifespan of the RTX 5060 Ti. A more pertinent question is how viable this GPU will remain for AI work as it ages. Some people still run LLMs on 1080s today. When I asked ChatGPT for its view, it estimated a viable period of 3-5 years.

From the graph above (plotted by ChatGPT), you can see that the more you use the PC, the sooner buying breaks even against renting a cloud VM. That much is obvious; the real question is what my usage patterns are likely to be, i.e., how much am I actually going to use the system, and for what?

**Possible usage patterns**

The most likely frequent use case is AI: running inference first, and fine-tuning eventually. That's easily an hour a day, so with the $850 PC I'm aiming for the 3.65-year break-even, which is 1,314 hours of run time (3.65 years × 360 days × 1 hour). I would also like to do some light gaming, perhaps catching up on FF7 parts 2 and 3 and Baldur's Gate 3. That's a possible total of 150 hours of gaming, maybe more for BG3, which still leaves over a thousand hours.
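As a quick check on the hours budget, here is a small sketch. It assumes the post's 30-day months (so a 360-day year), 1 hour of use per day, and the rough 150-hour gaming estimate; the variable names are illustrative.

```python
# Sketch: turn the break-even period into an hours budget, using the post's
# 30-day months (360 days a year) and 1 hour of use per day.
BREAK_EVEN_YEARS = 3.65  # $850 PC at 1 hr/day (from the summary)
DAYS_PER_YEAR = 12 * 30
HOURS_PER_DAY = 1
GAMING_HOURS = 150       # rough estimate: FF7 parts 2 and 3 plus BG3

budget_hours = BREAK_EVEN_YEARS * DAYS_PER_YEAR * HOURS_PER_DAY
remaining_hours = budget_hours - GAMING_HOURS
print(f"Budget: {budget_hours:.0f} h; left for AI after gaming: {remaining_hours:.0f} h")
```

This prints `Budget: 1314 h; left for AI after gaming: 1164 h`.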

More importantly, I will want to use the PC to train or fine-tune LLMs. Those are unattended hours, and unattended hours are where owning the machine pays off most, since my own time in front of a PC (or a device accessing it) is at a premium. One fine-tuning run of a 7B model will take 5-6 days, or about 120-144 hours. At that rate the PC might break even within 8 months, or within 16 months if I only run it at night.
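To get a feel for how quickly fine-tuning eats into the 1,314-hour budget, the sketch below counts whole 5- or 6-day runs. It is a rough count under a simplifying assumption: the budget is treated as plain run time, ignoring that a rented VM's storage fee accrues by calendar time rather than by use. `runs_to_break_even` is a hypothetical helper.

```python
import math

# Sketch: how many 7B fine-tuning runs fill the break-even run-time budget.
# Assumptions: each run lasts 5-6 days of continuous use, and the 1,314-hour
# budget is treated as plain run time (calendar-based storage fees ignored).


def runs_to_break_even(budget_hours: float, hours_per_run: float) -> int:
    """Number of whole runs needed to use up the run-time budget."""
    return math.ceil(budget_hours / hours_per_run)


BUDGET_HOURS = 1314  # $850 PC at 1 hr/day for 3.65 years (360-day years)
for days in (5, 6):
    run_hours = days * 24
    print(f"{days}-day run ({run_hours} h): "
          f"{runs_to_break_even(BUDGET_HOURS, run_hours)} runs")
```

Under these assumptions it takes about 10-11 runs.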

*Manual Calculations for the record*

I did the manual calculations below mainly as a sanity check on ChatGPT's calculations, which, unlike mine, do account for electricity costs.

**Cost of buying a PC**

There are a couple of variables involved, but I will settle on a conservative cost of $1200, broken down as follows:

1. $800 - Nvidia RTX 5060 Ti 16GB, new (chosen for its high VRAM for AI work; a new card also brings the newer architecture and a warranty). Its gaming performance sits roughly between a 3070 Ti and a 3080.

2. $400 - either an eGPU enclosure like the Razer Core to pair with my Intel NUC (8th gen, 16GB RAM, 512GB SSD), or a cheapish new CPU/motherboard/RAM.

Electricity costs about 30 cents per kWh now. Let's assume a 333W load (the 5060 design is efficient) and 1 hour of use a day, which is 0.333 kWh or about 10 cents per day ($3 a month and $36 a year). We assume a month is 30 days.
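The electricity arithmetic is easy to parameterize. This sketch uses the assumptions stated above (333 W load, 30 cents per kWh, 30-day months); `electricity_cost` is a hypothetical helper, not part of any library.

```python
# Sketch: electricity cost of running the PC, using the post's assumptions
# (333 W load, 0.30 SGD per kWh, 30-day months).


def electricity_cost(load_watts: float, hours_per_day: float,
                     price_per_kwh: float, days_per_month: int = 30):
    """Return (daily, monthly, yearly) electricity cost in SGD."""
    daily = load_watts / 1000 * hours_per_day * price_per_kwh  # kWh x price
    monthly = daily * days_per_month
    yearly = monthly * 12
    return daily, monthly, yearly


daily, monthly, yearly = electricity_cost(333, 1, 0.30)
print(f"${daily:.2f}/day, ${monthly:.2f}/month, ${yearly:.2f}/year")
```

This prints `$0.10/day, $3.00/month, $35.96/year`, in line with the rounded figures above.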

**Cost of renting a VM**

Let's use a cheaper but still reliable cloud provider. Using Tensordock as an example, I can rent an RTX A4000 (similar to a 3070 Ti), 8 vCPUs, 16GB RAM and a 500GB SSD for 23 cents an hour. The kicker is that even when the VM is unused you still pay for storage (which makes sense, because the provider can't use that SSD while you are renting it). That is about 1.8 cents per hour for 500GB, or about $13 a month whether or not the VM is used. The 23 cents per hour already includes the 1.8 cents of storage, so compute alone is 21.2 cents per hour, which at 1 hour a day comes to $6.36 a month. That makes $19.36 per month, or $232.32 a year.
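The rental cost can be sketched the same way. The rates are the Tensordock figures quoted above (21.2 cents per hour of compute, 1.8 cents per hour of storage billed around the clock) with 30-day months; `rental_cost` is a hypothetical helper. Note that this sketch does not round the storage fee: 1.8 cents × 24 × 30 is 12.96 SGD rather than 13, so it gives $19.32 a month and $231.84 a year instead of $19.36 and $232.32.

```python
# Sketch: monthly and yearly rental cost of the VM described above.
# Assumed rates from the post: 0.212 SGD/hour compute, 0.018 SGD/hour storage
# billed around the clock, 30-day months. Storage is NOT rounded to 13 here.


def rental_cost(hours_per_day: float,
                compute_per_hour: float = 0.212,
                storage_per_hour: float = 0.018,
                days_per_month: int = 30):
    """Return (monthly, yearly) rental cost in SGD."""
    storage_monthly = storage_per_hour * 24 * days_per_month  # charged even when idle
    compute_monthly = compute_per_hour * hours_per_day * days_per_month
    monthly = storage_monthly + compute_monthly
    return monthly, monthly * 12


monthly, yearly = rental_cost(hours_per_day=1)
print(f"${monthly:.2f}/month, ${yearly:.2f}/year")
```

This prints `$19.32/month, $231.84/year`.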

**Calculating break even point**

The question is how many years it takes before buying a PC beats renting. For that we divide the cost of the PC by the yearly cost of renting. For this example I assume electricity costs are negligible.
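Written out as a formula, with electricity added back as an optional term, the break-even point is where the PC price plus the electricity spent equals the rent paid. This is a sketch under the post's assumptions (all amounts in SGD, 30-day months): C_PC is the PC price, S the monthly storage fee (about 13), h the hours of use per day, r the hourly compute rate (0.212), e the electricity cost per hour of use (about 0.333 kW × 0.30 ≈ 0.10), and T the break-even time in years.

$$
C_{\text{PC}} + 12 \cdot 30\,h\,e\,T = 12\,\bigl(S + 30\,h\,r\bigr)\,T
\quad\Longrightarrow\quad
T = \frac{C_{\text{PC}}}{12\,\bigl(S + 30\,h\,(r - e)\bigr)}
$$

Setting e = 0 gives the simplified calculation used here; with h = 1 the denominator is 12 × (13 + 6.36) = 232.32.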

$1200 divided by $232.32 a year gives about 5.2 years.

If we play around with the variables and I can get my PC for $850 instead ($750 MSRP for the 5060 Ti plus a used Razer Core X for $100), the break-even point drops to about 3.7 years.
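The two manual results can be reproduced in a few lines, along with the effect of adding electricity back in. This sketch assumes the figures above: $232.32 a year of rent and $36 a year of electricity, both at 1 hr/day. `years_to_break_even` is a hypothetical helper.

```python
# Sketch: break-even years for both PC prices, with and without electricity.
# Assumed inputs from the post: 232.32 SGD/year rent at 1 hr/day and
# 36 SGD/year electricity for the same usage.


def years_to_break_even(pc_cost: float, yearly_rent: float,
                        yearly_electricity: float = 0.0) -> float:
    """Years until rent saved (minus electricity spent) covers the PC price."""
    yearly_saving = yearly_rent - yearly_electricity
    if yearly_saving <= 0:
        raise ValueError("Electricity costs exceed rent; buying never breaks even.")
    return pc_cost / yearly_saving


YEARLY_RENT = 232.32
YEARLY_ELECTRICITY = 36.0

for pc_cost in (1200, 850):
    without = years_to_break_even(pc_cost, YEARLY_RENT)
    with_power = years_to_break_even(pc_cost, YEARLY_RENT, YEARLY_ELECTRICITY)
    print(f"${pc_cost} PC: {without:.2f} years (no electricity), "
          f"{with_power:.2f} years (with electricity)")
```

For the $1200 PC this gives about 5.17 years without electricity and 6.11 years with it; for the $850 PC, 3.66 and 4.33 years.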